What is VR?

Virtual Reality (VR), which can be referred to as immersive multimedia or computer-simulated life, replicates an environment that simulates physical presence in places in the real world or imagined worlds.

Most current virtual reality environments are displayed either on a computer screen or on special stereoscopic displays. Some simulations add further sensory information, such as realistic sound delivered to the VR user through speakers or headphones.

The simulated environment can be similar to the real world in order to create a lifelike experience (for example, in simulations for pilot or combat training), or it can differ significantly from reality, as in VR games. In practice, it is currently very difficult to create a high-fidelity virtual reality experience because of technical limitations on processing power, image resolution, and communication bandwidth. However, VR’s proponents hope that its enabling technologies will become more powerful and cost-effective over time.

What is immersive video and how does it relate to VR?

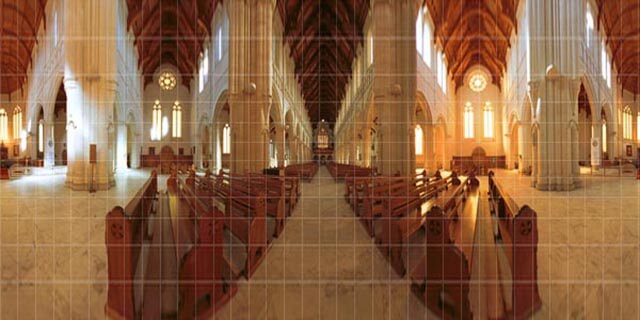

An immersive video is a video recording of a real-world scene in which the view in every direction is recorded at the same time. During playback, the viewer controls the viewing direction: up, down and sideways. Generally, the only area that can’t be viewed is the view toward the camera support. The material is recorded as data which, when played back through a software player, gives the user control of the viewing direction and playback speed.

The player is typically controlled with a mouse or other sensing device, and the playback view is typically a window on a computer display, a projection screen, or another presentation device such as a head-mounted display (HMD).

Because they can be viewed on HMDs, immersive videos can be considered another form of virtual reality.

How do you represent immersive video?

There are many ways to store an immersive video. The most commonly used is the equirectangular projection, which is a spherical projection. We used the spherical projection because it is the easiest way to view a full environment.

A spherical projection is based on a spherical model:

x = \lambda \cos \varphi_{1}

y = \varphi

where

\lambda is the longitude

\varphi is the latitude

\varphi_{1} are the standard parallels (north and south of the equator) where the scale of the projection is true

x is the horizontal position along the map

y is the vertical position along the map.

The point (0,0) is at the center of the resulting projection.
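As a minimal sketch of the formulas above, the following Python projects a longitude/latitude pair and then converts the result to pixel coordinates. The function names, the image size, and the choice of the equator as the standard parallel (so that the image has the usual 2:1 layout) are assumptions made for illustration, not part of our implementation.

```python
import math

def equirect_project(lon_deg, lat_deg, std_parallel_deg=0.0):
    """Apply x = lambda * cos(phi_1), y = phi, with angles converted to radians."""
    lam = math.radians(lon_deg)
    phi = math.radians(lat_deg)
    phi1 = math.radians(std_parallel_deg)
    return lam * math.cos(phi1), phi

def map_to_pixel(x, y, width, height):
    """Convert map coordinates to (column, row), assuming phi_1 = 0.

    Map point (0, 0) lands at the image center; the top-left pixel
    corresponds to longitude -180 degrees, latitude +90 degrees.
    """
    col = (x / math.pi + 1.0) * 0.5 * (width - 1)
    row = (0.5 - y / math.pi) * (height - 1)
    return col, row

# Example: where does the direction 90 degrees east, 45 degrees up land
# in a 4096 x 2048 equirectangular frame?
x, y = equirect_project(90.0, 45.0)
col, row = map_to_pixel(x, y, 4096, 2048)
```

Pixel rows grow downward while latitude grows upward, which is why the row formula subtracts the latitude term.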

Other projection types include:

Cylindrical projection

Mercator Projection

Little Planet Projection

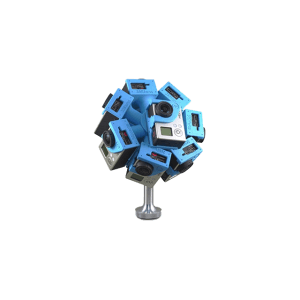

How do you record immersive videos?

This area is still under active development, and people are still trying to figure out the best way. However, here are some commonly used methods.

The process of stitching what you record

After you get the videos, you first need some meta information, specifically the position of each shot relative to the rig, in degrees. For example, video file mov1235.avi corresponds to a horizontal offset of 45 degrees, a vertical offset of 90 degrees, and a roll (rotation of the camera around its lens axis) of 90 degrees. For our algorithm, which supports any number of cameras, this was essential for placing the shots in space.
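One way to hold this meta information is a small record per video file. The sketch below is only an illustration: the class name and field names are hypothetical, and the values are the ones from the example above.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ShotMeta:
    """Placement of one video relative to the rig, in degrees (hypothetical structure)."""
    filename: str
    horizontal_offset_deg: float
    vertical_offset_deg: float
    roll_deg: float  # rotation of the camera around its lens axis

# One entry per camera; the list can hold any number of shots.
shots = [
    ShotMeta("mov1235.avi", horizontal_offset_deg=45.0,
             vertical_offset_deg=90.0, roll_deg=90.0),
]
```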

Next we will give a general overview of our algorithm (for a single frame):

First, we get a frame from each video, along with its associated meta information. Next, we process the frames by undistorting them and rotating them (using the roll parameter). Afterwards, in sequence, we virtually project each frame onto the interior of a sphere, compositing each one over the previous ones and compensating for exposure differences.

Note that the edges of the frame have an opacity falloff (feather). If any parts of the sphere are left unprojected (projection is transparent), we refine the result by recompositing the current equirectangular projection over a blurred version of itself, creating a smoother background, and a nicer transition to black.

Now we will go in depth for each step.

Undistortion:

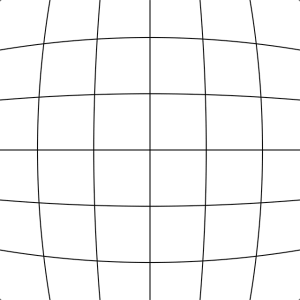

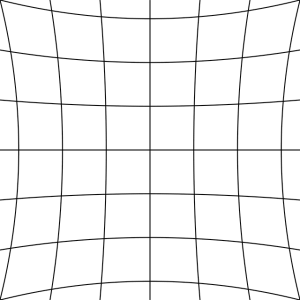

Before we explain what undistortion is, we must first understand what distortion is. Because lenses are not perfect objects, they introduce many artifacts, one of them being distortion.

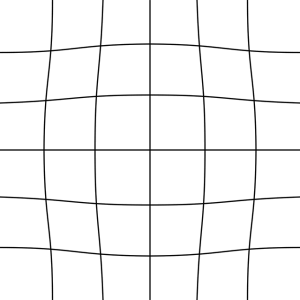

There are three main types of distortion: barrel, pincushion and mustache (a combination of the other two).

Barrel distortion

Pincushion distortion

Mustache distortion

Therefore, undistortion is the process of compensating for the lens distortion artifact. In our particular case, we compensated only for barrel distortion.
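To make the idea concrete, here is a minimal sketch that uses a one-coefficient radial distortion model. This model, the coefficient name k1, and the function name are assumptions for illustration; they are not necessarily the model we used. Coordinates are normalized so that the image center is (0, 0). Undistortion is commonly done by inverse mapping: for every pixel of the corrected output image, compute where that point sits in the distorted source image and sample the source there.

```python
def distorted_source_coords(xu, yu, k1):
    """Return where an ideal (undistorted) point appears in the distorted image.

    Uses the radial model x_d = x_u * (1 + k1 * r^2), with r measured from
    the image center in normalized coordinates. In this convention a
    negative k1 pulls points toward the center, i.e. barrel distortion.
    """
    r2 = xu * xu + yu * yu
    scale = 1.0 + k1 * r2
    return xu * scale, yu * scale

# For each output pixel (xu, yu) of the corrected frame, sample the
# distorted source frame at the returned coordinates.
xs, ys = distorted_source_coords(0.5, 0.5, k1=-0.2)
```

The center of the image is unaffected, and the correction grows with the distance from the center, which matches the way barrel distortion is strongest near the frame edges.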

Positioning on the sphere:

We calculate the equirectangular projection of the frame, positioning it according to the coordinates derived from the meta information (horizontal and vertical offsets) after converting them to a spherical coordinate system. This is a fairly complex mathematical operation, which we will not go into here.

Assuming that the resulting equirectangular map already contains some content, we do the following: we create an intersection map (a 1-bit image); then, based on this map and the previous alpha maps of each component of the intersection, we calculate a ‘hole’ map and blur it. We then stencil through with our intersection map.
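Without reproducing our actual calculation, the core idea can be sketched as follows: take a viewing direction in the camera's own coordinate system, rotate it by the shot's vertical and horizontal offsets, and read off longitude and latitude. The axis conventions (x right, y up, z forward), the rotation order, and the function name are all assumptions chosen for this illustration.

```python
import math

def camera_ray_to_lon_lat(direction, horizontal_offset_deg, vertical_offset_deg):
    """Rotate a camera-space direction into rig space and return (lon, lat) in degrees.

    Assumed conventions: x points right, y up, z forward; a positive
    vertical offset tilts the camera up, a positive horizontal offset
    turns it to the right.
    """
    x, y, z = direction
    pitch = math.radians(vertical_offset_deg)
    yaw = math.radians(horizontal_offset_deg)

    # Tilt up: rotation about the x axis.
    y, z = (y * math.cos(pitch) + z * math.sin(pitch),
            -y * math.sin(pitch) + z * math.cos(pitch))
    # Turn right: rotation about the y axis.
    x, z = (x * math.cos(yaw) + z * math.sin(yaw),
            -x * math.sin(yaw) + z * math.cos(yaw))

    norm = math.sqrt(x * x + y * y + z * z)
    lon = math.degrees(math.atan2(x, z))
    # Clamp to guard against rounding slightly outside [-1, 1].
    lat = math.degrees(math.asin(max(-1.0, min(1.0, y / norm))))
    return lon, lat

# The optical axis of a camera mounted 45 degrees to the right and tilted 30 degrees up.
lon, lat = camera_ray_to_lon_lat((0.0, 0.0, 1.0), 45.0, 30.0)
```

Applying this to the direction of every pixel in a frame gives the longitude and latitude at which that pixel should be drawn in the equirectangular map.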

We now calculate a LUT (lookup table) to apply to the current frame to compensate for exposure difference.
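The text does not specify how the LUT is derived, so the following is only one simple possibility, assuming 8-bit single-channel images and NumPy: estimate a brightness gain from the mean intensity of the overlapping region in both images, and bake that gain into a 256-entry table. The function names are hypothetical.

```python
import numpy as np

def exposure_lut(new_overlap, existing_overlap):
    """Build a 256-entry LUT that scales the new frame toward the existing map.

    Both arguments are uint8 arrays covering the same overlapping region.
    A single gain from the ratio of mean intensities is an assumption of
    this sketch, not a description of the original algorithm.
    """
    mean_new = float(new_overlap.mean())
    mean_existing = float(existing_overlap.mean())
    gain = mean_existing / mean_new if mean_new > 0 else 1.0
    levels = np.arange(256, dtype=np.float64)
    return np.clip(np.rint(levels * gain), 0, 255).astype(np.uint8)

def apply_lut(frame, lut):
    """Replace every pixel value v in a uint8 frame with lut[v]."""
    return lut[frame]

# Example: the new frame is darker than the existing map in the overlap.
lut = exposure_lut(np.full((4, 4), 100, dtype=np.uint8),
                   np.full((4, 4), 120, dtype=np.uint8))
corrected = apply_lut(np.array([[100, 50]], dtype=np.uint8), lut)
```

A lookup table is convenient here because the correction is computed once per frame and then applied to every pixel with a single indexing operation.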

We then merge over this result with the alpha of the previously created projection and we composite it with the existing equirectangular map. We merge by calculating the result of the following equation for each pixel in the region of interest:

A \times a_{A} + B \times (1 - a_{A})

where

A is the foreground pixel

B is the background pixel

a_{A} is the alpha (opacity) of the foreground pixel.
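The merge equation can be applied to whole images at once. The sketch below assumes NumPy, floating-point color images of shape (height, width, 3), and an alpha map of shape (height, width) with values between 0 and 1; the function name is illustrative.

```python
import numpy as np

def composite_over(foreground, foreground_alpha, background):
    """Blend foreground over background per pixel: A * alpha + B * (1 - alpha)."""
    # Add a trailing axis so the alpha map broadcasts across the color channels.
    alpha = foreground_alpha[..., np.newaxis]
    return foreground * alpha + background * (1.0 - alpha)

# Example: a white frame with varying opacity over a black background.
fg = np.ones((2, 2, 3))
bg = np.zeros((2, 2, 3))
alpha = np.array([[1.0, 0.5],
                  [0.25, 0.0]])
result = composite_over(fg, alpha, bg)
```

Where the alpha is 1 the foreground fully replaces the background, and where it is 0 the background is left untouched, so a feathered alpha map produces a gradual transition between the two.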

Challenges, issues and others

The main challenge is performance, because multiple HD video files need to be processed and this is very time consuming. Also, the hardware requirements for viewing immersive videos in good quality are very steep. This is because when you are viewing through your viewport, you’re seeing just a portion of the video that is scaled up. So, for a 1080p viewport, you actually need a 16k x 8k source video for maximum quality.

Another challenge is synchronizing the videos, so that the result doesn’t feel wobbly and the same people don’t appear in different places (due to the time offset).
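The relationship between viewport size and source size can be estimated with simple arithmetic. The sketch below assumes a constant number of pixels per degree across the image (a simplification) and treats the horizontal field of view as an input, since the text does not state one; the function name is illustrative. Under this approximation, a source of roughly 16k wide corresponds to a 1920-pixel viewport covering about 45 degrees.

```python
def required_source_width(viewport_width_px, horizontal_fov_deg):
    """Estimate the equirectangular width that gives one source pixel per viewport pixel.

    Assumes pixels are spread evenly over the field of view, so the full
    360 degrees needs viewport_width * 360 / fov pixels.
    """
    return viewport_width_px * 360.0 / horizontal_fov_deg

# A 1920-pixel-wide viewport at two assumed fields of view.
width_fov_90 = required_source_width(1920, 90.0)
width_fov_45 = required_source_width(1920, 45.0)

# An equirectangular frame is twice as wide as it is tall.
height_fov_45 = width_fov_45 / 2
```

The narrower the field of view shown in the viewport, the more the visible portion is magnified and the larger the source has to be.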

References:

- //en.wikipedia.org/wiki/Virtual_reality

- //en.wikipedia.org/wiki/Equirectangular_projection

- //en.wikipedia.org/wiki/Distortion_(optics)

Image sources:

- //www2.iath.virginia.edu/panorama/section1.html

- //www.lemis.com/

- //www.roadtovr.com/

- //www.vrfilms.com/

- //www.dizzyview.com.au/panoramas.html

- //nikonrumors.com

Authors:

Ștefan-Gabriel Gavrilaș

Ionuț Popovici